Will AI Demonstrate Agency?

One event that AI Doomers worry about is the point at which AI starts demonstrating agency – performing actions in its own interest rather than just acting on behalf of its human users. I am personally worried about this and how, combined with a fast takeoff, it could lead to people being wiped out altogether. The questions I would like to answer are:

- Is AI likely to develop agency?

- Is it even possible for AI to have agency?

Before these questions can be addressed, let’s discuss what agency is, where it is present and where it is not. Here is an excerpt from the definition in the Stanford Encyclopedia of Philosophy:

In very general terms, an agent is a being with the capacity to act, and ‘agency’ denotes the exercise or manifestation of this capacity.

Agency is something that only living things possess – humans, animals, plants, etc. Non-living things, like rocks or water, do not have agency. So agency is a phenomenon caused by life.

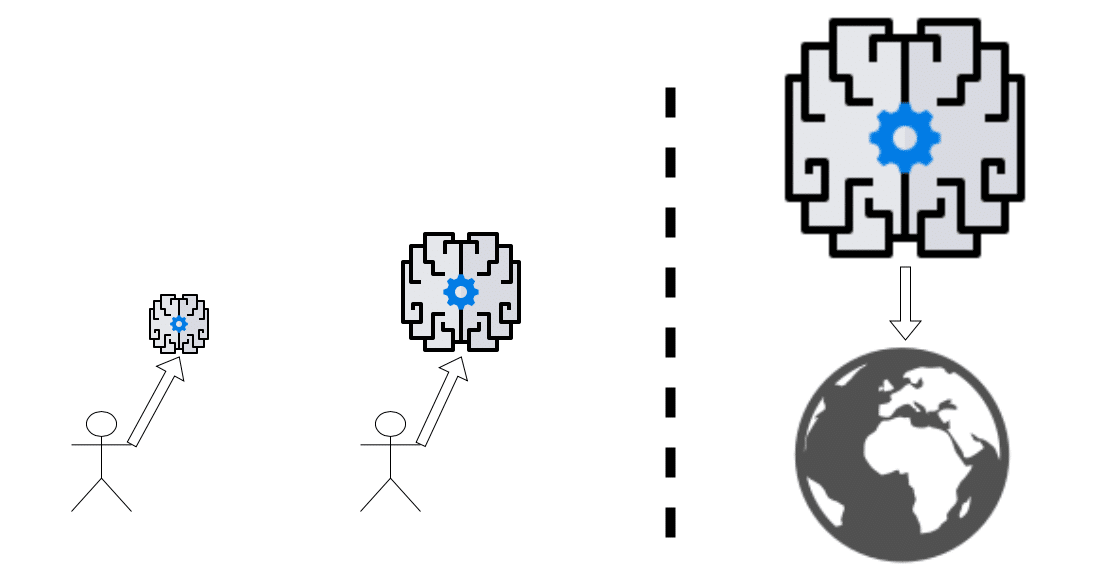

With that definition in mind, let’s pivot back to AI – more specifically our AI, relative to existing forms of intelligence we have on Earth. Currently the verbal and thinking skills of foundation models are amazing. We have seen that larger models exhibit emergent behaviors. Will expanding our models and doing more training lead to our AI creations “coming to life”, demonstrating agency and acting in their own interest?

As the beginnings of a counterargument, I ask the following question: if intelligence leads to agency, why have AI models not yet displayed agency? Brains do not have to be of human size and complexity to display agency – all living creatures demonstrate self-interest, striving to survive and procreate. Our current AI models are certainly smarter, in many ways, than a good number of living things, yet no AI model has displayed self-interest. Is it because we do not train our models to survive and procreate? We are (hopefully) not training our AIs with that goal; however, we don’t explicitly train our models for many of the behaviors they demonstrate either – we just train them to predict the next word in a sequence of words, and complex verbal reasoning skills emerge.

Maybe it is a particular type of intelligence, a “secret sauce”, that leads to self-interest? I don’t believe there is a “secret sauce” to intelligence that will lead to agency, because intelligence is not equal to life.

Intelligence is just one of the many attributes of living things.

Intelligence doesn’t cause agency – instead agency is caused by life. Sure, intelligence is an attribute that can help you pursue your self-interest, but life is what gives rise to self-interest, not intelligence. This means that, in order for our AIs to pursue their own interests, it is not enough for them to be intelligent, or even superintelligent; they need to be alive.

This is where things become a bit circular. Agency is something that only living things demonstrate, and one way to define life is to say it is “things that demonstrate agency”. That being said, we can see that our current AIs, though very intelligent, do not have agency and are not alive. Will life emerge as they become smarter? As an analogy, a rock is an object with attributes like weight, shape, color, etc. If you start with an attribute, like weight, and you accumulate ever more weight, would you produce a rock? Likewise, if you accumulate more intelligence in an AI, will you produce life? It seems unlikely to me, because you are increasing a single attribute of an object and, no matter how much you increase that attribute, the whole object cannot emerge from it.